Creating a simulation environment for robot training is hard, but accelerating asset discovery using USD Search makes it easier.

September 29, 2025

Lightwheel

by Mustafa

How We Are Using NVIDIA USD Search API at Lightwheel

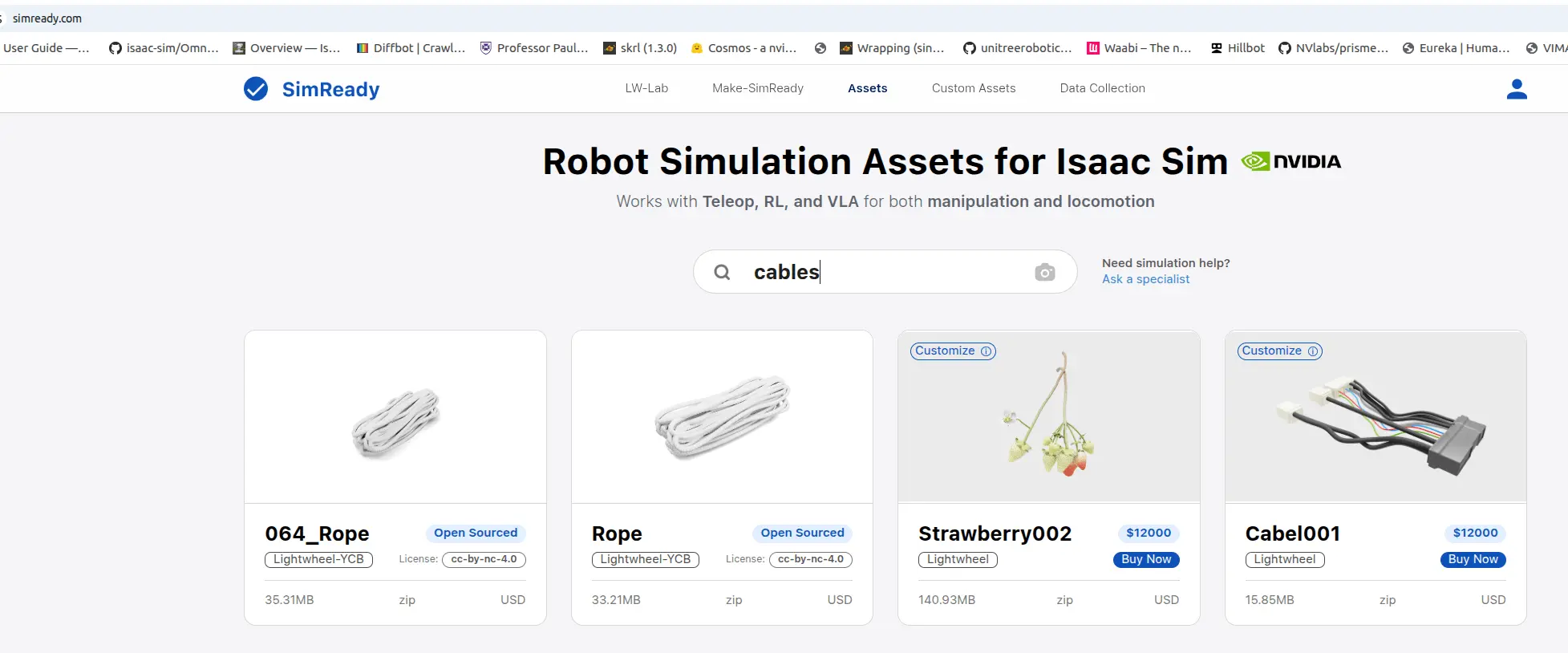

Natural Language + Image Based Search: At Lightwheel, we have implemented NVIDIA USD Search API as the core discovery engine across our platforms, tailoring its multi-modal capabilities to specific user needs. On SimReady.com, USD Search is currently deployed across approximately 2,000 of our highest-quality, most rigorously curated assets specifically designed for robotic manipulation and locomotion. This premium collection includes the foundational objects from the YCB Benchmark, which we have standardized and now offer as Lightwheel-YCB.

Type Based Search: Simultaneously, we leverage USD Search's powerful type-based categorization functionality on Lightwheel.ai. This allows researchers and developers to filter their search specifically within the Lightwheel-YCB dataset. This targeted application ensures that academic and industrial users working with this benchmark can instantly locate the exact standardized objects they need for reproducible experiments, without sifting through our entire broader asset library.

Finding Assets Is The First Challenge in Robotics Simulation

Developing robust robotics simulation environments has become increasingly complex as the field demands higher fidelity, greater realism, and more comprehensive testing scenarios. Engineers and researchers face significant hurdles when assembling the thousands of 3D assets, models, and environments needed for effective simulation (we define effective as accounting for any variable that is consequential to the sim2real gap). Traditional approaches to finding and assembling assets during simulation environment creation (that is, asset preparation, training, and testing) often create bottlenecks that slow down training cycles.

At Lightwheel, we understand these challenges intimately. Our platforms, lightwheel.ai and simready.com, serve the robotics simulation community by providing access to high-quality assets and simulation-ready environments. However, we recognized that even the best asset library is only as valuable as its assets are findable. This realization led us to integrate NVIDIA USD Search API technology into our marketplace infrastructure, a decision that has fundamentally transformed how we and our users interact with simulation assets and that directly addresses the core difficulty of environment building.

From Traditional Search to Natural Language Flexibility

OpenUSD is an open and extensible ecosystem for 3D worlds. It accelerates robot training by providing a unified framework that consolidates geometry, physics, and sensor data into a single source of truth, which is critical for the physically based simulation that robot training and testing require.

The Limitations of Conventional Search Methods

Traditional search systems in the 3D asset space typically rely on text-based metadata, filename matching, and basic categorization. Users must know exactly what they're looking for and express it in precise terminology. This approach creates several problems:

Vocabulary barriers: Users may not know the exact technical terms for the objects they need

Limited descriptive capability: Text alone cannot capture the visual and spatial qualities of 3D objects

Time-intensive browsing: Finding suitable assets often requires manual inspection of numerous results

Inconsistent metadata: Asset descriptions may be incomplete or use varying terminology
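The vocabulary barrier described above can be made concrete with a small sketch. It assumes a simple substring matcher over filenames, which is only a stand-in for conventional search and not any particular product's implementation; the filenames and the `keyword_search` helper are invented for illustration.

```python
def keyword_search(query: str, filenames: list[str]) -> list[str]:
    """Return filenames that contain every query word as a literal substring."""
    words = query.lower().split()
    return [name for name in filenames if all(w in name.lower() for w in words)]


# Hypothetical filenames, invented for illustration only.
FILENAMES = [
    "cylindrical_object_mesh_food_container.usd",
    "foam_brick_v2.usd",
    "rubber_ball_small.usd",
]

# A query that happens to reuse the filename vocabulary finds its target.
exact_hits = keyword_search("food container", FILENAMES)

# A descriptive query shares no literal words with any filename, so it finds nothing.
vague_hits = keyword_search("squishy things", FILENAMES)

print(exact_hits)
print(vague_hits)
```

The second query returns an empty list even though the foam brick and rubber ball are plausible answers, which is the gap that semantic search is meant to close.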

USD Search: An Efficient, Multi-Modal Solution for Simulation Environments

Given the limitations above, USD Search represents a shift from low-accuracy, single-modality search to high-accuracy, multi-modal search in robotics simulation environments. Built on NVIDIA's NVCLIP technology, a commercial implementation of the Contrastive Language-Image Pre-Training (CLIP) model, USD Search enables three distinct search modalities:

Natural Language Search

Users can search using descriptive, intuitive language. Instead of searching for asset filenames (such as "cylindrical_object_mesh_food_container"), users can simply search for "squishy things" or "objects that bounce." This natural language capability makes asset discovery accessible to users regardless of their technical vocabulary.

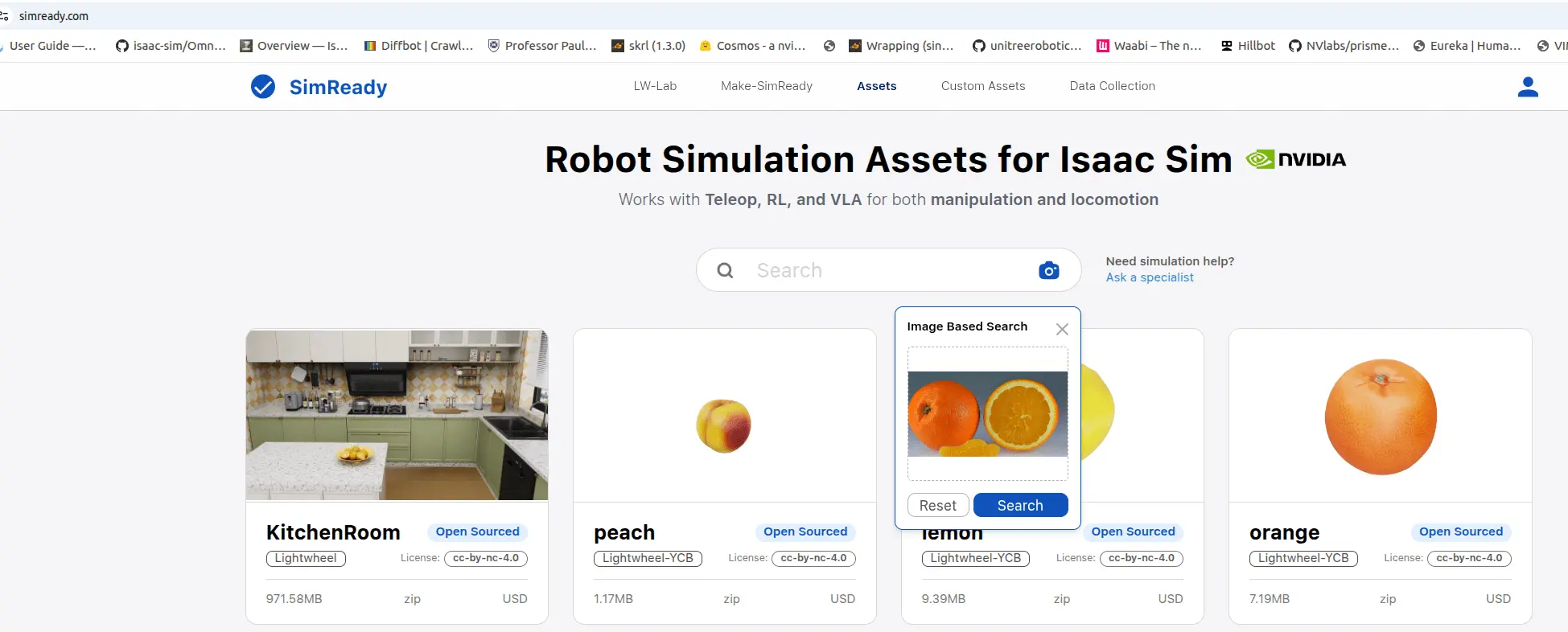

Visual Similarity Search

Perhaps most remarkably, USD Search enables image-to-asset searching. Users can upload a photograph or sketch, and the system identifies visually similar 3D assets. This capability is particularly valuable when users have a specific object in mind but lack the terminology to describe it effectively.

Type-Based Categorization

For structured workflows, USD Search supports precise categorical searching. At Lightwheel, we leverage this functionality extensively for our Lightwheel-YCB benchmark assets, allowing researchers to quickly locate specific standardized objects for their experiments.
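The three modalities above can be pictured as one query object with a mode. The sketch below is a hypothetical container, not the USD Search API's request schema: the `AssetQuery` class, its field names, and the rule that text and image are alternatives rather than combined inputs are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AssetQuery:
    """Hypothetical query container; field names are illustrative assumptions."""

    text: Optional[str] = None        # natural language description
    image_path: Optional[str] = None  # photograph or sketch to match against
    asset_type: Optional[str] = None  # category or dataset tag used as a filter

    def __post_init__(self) -> None:
        # Assumption: a query carries text or an image, never both at once.
        if self.text is not None and self.image_path is not None:
            raise ValueError("provide text or an image, not both")
        if self.text is None and self.image_path is None and self.asset_type is None:
            raise ValueError("query is empty")

    @property
    def mode(self) -> str:
        """Name the search modality this query would use."""
        if self.text is not None:
            return "text"
        if self.image_path is not None:
            return "image"
        return "type"


text_query = AssetQuery(text="objects that bounce")
image_query = AssetQuery(image_path="mug_photo.png", asset_type="Lightwheel-YCB")
type_query = AssetQuery(asset_type="Lightwheel-YCB")
print(text_query.mode, image_query.mode, type_query.mode)
```

Keeping the type filter as an optional field alongside the text or image input mirrors how a benchmark-scoped search narrows the candidate set before relevance ranking.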

The Technical Foundation: NVCLIP and Transformer Architecture

Core Technology

USD Search leverages NVCLIP, NVIDIA's enhanced version of the original CLIP model, which uses a transformer-based architecture to create unified embeddings for both text and images. This architecture enables the system to understand semantic relationships between visual and textual information in a shared embedding space.
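To illustrate what a shared embedding space makes possible, here is a minimal sketch of ranking assets by cosine similarity to a query vector. The three-dimensional vectors are toy values invented for this example; real CLIP-style embeddings have hundreds of dimensions and come from the model's encoders, and this sketch does not reproduce how USD Search itself scores results.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_assets(query_embedding: list[float],
                asset_embeddings: dict[str, list[float]]) -> list[tuple[str, float]]:
    """Return (asset name, similarity) pairs, most similar first."""
    scored = [
        (name, cosine_similarity(query_embedding, embedding))
        for name, embedding in asset_embeddings.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy embeddings, invented for illustration only.
ASSET_EMBEDDINGS = {
    "foam_brick": [0.9, 0.1, 0.0],
    "metal_wrench": [0.0, 0.2, 0.9],
    "rubber_ball": [0.7, 0.6, 0.1],
}

# Stands in for the encoded query "squishy things"; an encoded photo would be used the same way.
QUERY_EMBEDDING = [1.0, 0.2, 0.0]

ranking = rank_assets(QUERY_EMBEDDING, ASSET_EMBEDDINGS)
for name, score in ranking:
    print(f"{name}: {score:.3f}")
```

Because text and images land in the same vector space, the same ranking function serves both query types; only the encoder that produces the query vector changes.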

Key Technical Specifications

Architecture: Transformer-based with Vision Transformer (ViT) components

Input Types: Text descriptions or RGB images (not simultaneously)

Output Format: Sorted relevance lists with rendered thumbnails and metadata

Performance: 77.86% top-1 accuracy on ImageNet validation (ViT-H-336 model)

Hardware Requirements: NVIDIA Ampere, Hopper, or Lovelace architectures

Training Data: 700 million images from NVIDIA's internal dataset
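For readers unfamiliar with the top-1 accuracy figure in the list above, the metric is the fraction of samples whose single highest-ranked prediction equals the true label. The sketch below shows that definition on four made-up labels; it is not the evaluation code behind the reported number.

```python
def top1_accuracy(predicted_labels: list[str], true_labels: list[str]) -> float:
    """Fraction of samples whose top prediction matches the true label."""
    if len(predicted_labels) != len(true_labels):
        raise ValueError("prediction and label lists must have the same length")
    if not true_labels:
        raise ValueError("at least one sample is required")
    correct = sum(p == t for p, t in zip(predicted_labels, true_labels))
    return correct / len(true_labels)


# Made-up labels for illustration: the second prediction is wrong.
predicted = ["mug", "bowl", "drill", "banana"]
actual = ["mug", "plate", "drill", "banana"]

accuracy = top1_accuracy(predicted, actual)
print(f"top-1 accuracy: {accuracy:.2%}")
```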

Advanced 3D Understanding

What sets USD Search apart from general-purpose image search systems is its specific training on 3D asset data and its ability to understand spatial relationships, material properties, and functional characteristics of objects within simulation contexts. This specialized knowledge enables more accurate matching for robotics applications.

Why SimReady.com Chose USD Search

Advantage One: Enhanced User Experience Through Discoverability

Our decision to integrate USD Search was driven by measurable improvements in user engagement and satisfaction, and by something less formal: finding assets became playful and fun.

Advantage Two: Superior 3D-to-Language Translation

Unlike conventional search services that translate 2D images to text, USD Search relates 3D asset content to natural language. This deeper level of intelligence enables more accurate semantic matching between user intent and available assets.

Advantage Three: Seamless Integration with OpenUSD Workflows

As simulation workflows increasingly adopt OpenUSD, USD Search provides native compatibility with USD-formatted assets. This integration eliminates format conversion steps and maintains metadata integrity from searching for an asset through finding it and using it.

Advantage Four: Benchmark Integration and Academic Support

The integration has been particularly valuable for academic users working with standardized datasets. Our implementation of the Lightwheel-YCB benchmark, which includes objects from Yale, CMU, and Berkeley research initiatives, demonstrates how USD Search can support rigorous academic research while maintaining the accessibility needed for broader adoption. The recent completion of the YCB Benchmark through the Lightwheel SimReady pipeline underscores the critical role of advanced search in making foundational academic resources instantly findable and usable for the entire research community.

Real-World Impact and Use Cases

Use Case One: Robotics Research Applications

Research teams using our platform report significant productivity gains when assembling test scenarios. The ability to search for "objects suitable for grasping practice" or "kitchen items under 500 grams" enables rapid scenario development that would previously require extensive manual curation.

Search: “objects suitable for grasping practice”

Search: “kitchen items under 500 grams”
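How a numeric constraint such as "under 500 grams" is resolved is not described here. One way to make such a constraint explicit is to filter ranked results on asset metadata, as in the sketch below. The `SearchHit` class, the `mass_g` field, and the sample values are hypothetical and chosen only to illustrate the idea.

```python
from dataclasses import dataclass


@dataclass
class SearchHit:
    """Hypothetical search result; the fields are illustrative assumptions."""

    name: str
    score: float   # relevance score, higher is better
    mass_g: float  # assumed mass metadata, in grams


def filter_by_max_mass(hits: list[SearchHit], max_mass_g: float) -> list[SearchHit]:
    """Keep hits lighter than max_mass_g, ordered by descending relevance."""
    kept = [hit for hit in hits if hit.mass_g < max_mass_g]
    return sorted(kept, key=lambda hit: hit.score, reverse=True)


# Made-up results for a "kitchen items" query.
HITS = [
    SearchHit("cast_iron_pan", score=0.91, mass_g=2100.0),
    SearchHit("wooden_spoon", score=0.79, mass_g=45.0),
    SearchHit("ceramic_mug", score=0.88, mass_g=320.0),
]

light_items = filter_by_max_mass(HITS, max_mass_g=500.0)
print([hit.name for hit in light_items])
```

The heaviest item is dropped despite having the best relevance score, which shows why physical metadata matters for manipulation scenarios where payload limits apply.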

Use Case Two: Industrial Simulation Development

Manufacturing simulation teams leverage the visual search capability to quickly locate assets that match real-world production environments. By uploading photographs from factory floors, engineers can identify simulation-ready equivalents for testing and validation.

Use Case Three: Educational and Training Programs

Academic institutions use the natural language search to help students and researchers find appropriate assets without requiring deep familiarity with technical taxonomies. This accessibility has lowered the barrier to entry for robotics simulation education.

Technical Implementation and Performance

Infrastructure Requirements

Implementing USD Search requires careful consideration of hardware and software requirements:

Runtime Environment: TensorRT for optimized inference

GPU Requirements: NVIDIA Ampere generation or newer

Operating System: Linux-based deployment

Model Specifications: NVCLIP ViT-H with 224-pixel resolution
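The GPU requirement above can be checked programmatically before deployment. The sketch below assumes the check is made against the CUDA compute capability, where 8.0 is the first Ampere value; the helper name and the sample capabilities are illustrative, and this is not an official NVIDIA validation procedure.

```python
# Lowest CUDA compute capability of the Ampere generation.
AMPERE_MIN_CAPABILITY = (8, 0)


def meets_gpu_requirement(capability: tuple[int, int]) -> bool:
    """True if a (major, minor) compute capability is Ampere or newer."""
    # Tuples compare element by element, so (8, 6) >= (8, 0) and (7, 5) < (8, 0).
    return capability >= AMPERE_MIN_CAPABILITY


# Sample capabilities: Turing, Ampere, Ada Lovelace, Hopper.
for capability in [(7, 5), (8, 6), (8, 9), (9, 0)]:
    status = "ok" if meets_gpu_requirement(capability) else "too old"
    print(f"compute capability {capability[0]}.{capability[1]}: {status}")
```

On a machine with PyTorch installed, `torch.cuda.get_device_capability()` returns a `(major, minor)` tuple that could be passed to this helper directly.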

Performance Metrics

Our deployment shows consistent sub-second response times for both text and image queries, with relevance rankings that improve over time through user interaction feedback. The system is expected to process 10,000 queries daily across our platform with minimal performance degradation.
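To put the daily query volume in perspective, the arithmetic below converts 10,000 queries per day into an average rate. The peak factor is a made-up assumption used to illustrate burstier traffic; it is not a measured figure from our deployment.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds


def average_qps(queries_per_day: float) -> float:
    """Average queries per second if load were spread evenly over a day."""
    return queries_per_day / SECONDS_PER_DAY


def busy_period_qps(queries_per_day: float, peak_factor: float) -> float:
    """Queries per second during a busy period, given an assumed peak factor."""
    return average_qps(queries_per_day) * peak_factor


avg = average_qps(10_000)
# Assumption: busy periods see ten times the average rate.
peak = busy_period_qps(10_000, peak_factor=10.0)
print(f"average: {avg:.3f} queries/s, assumed peak: {peak:.2f} queries/s")
```

At roughly 0.12 queries per second on average, and a little over one per second under the assumed peak, sub-second responses leave room for the expected load.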

Future Implications and Industry Impact

Evolving Simulation Workflows

The success of multi-modal search in our marketplace suggests broader implications for how simulation environments will be developed and maintained. As asset libraries grow larger and more diverse, intelligent search mechanisms become essential infrastructure rather than convenient features.

Standardization and Interoperability

USD Search's native support for OpenUSD content promotes standardization across the robotics simulation ecosystem. This standardization facilitates better collaboration between research institutions, commercial developers, and platform providers.

Accessibility and Democratization

By reducing the technical barriers to asset search, USD Search contributes to the democratization of robotics simulation. Smaller research teams and educational institutions can now access the same sophisticated asset libraries that were previously practical only for large, well-resourced organizations.

Conclusion

The integration of USD Search into our simulation marketplace represents more than a technological upgrade—it embodies a fundamental shift toward more intuitive, intelligent, and accessible robotics simulation development. By enabling natural language queries, visual similarity matching, and precise categorical searches, USD Search has transformed how our users search, load and assemble simulation assets, directly tackling the hardest parts of building simulation environments.

As the robotics industry continues to rely more heavily on simulation for development, testing, and validation, the tools that support these workflows must evolve to match increasing demands for efficiency and capability. USD Search demonstrates that artificial intelligence can meaningfully enhance the simulation development process, not by replacing human expertise, but by augmenting human capability and removing unnecessary friction from creative and technical workflows.

The success we've observed at Lightwheel through lightwheel.ai and simready.com suggests that natural language search represents the future of asset search in technical domains. As this technology continues to mature, we anticipate even more sophisticated capabilities that will further accelerate robotics research and development across academia and industry.

USD Search is available through NVIDIA's Omniverse commercial licensing program. Lightwheel's implementation demonstrates the practical benefits of integrating advanced AI search capabilities into simulation workflows. For more information about our platform and asset marketplace, visit lightwheel.ai and simready.com.