From Overnight to Under an Hour: Validating NVIDIA Isaac Lab-Arena's GPU-Accelerated Evaluation

Physical AI evaluation is no longer a downstream validation step—it is a central mechanism for guiding data collection, model design, and learning itself. Foundation models have outgrown isolated demos and narrow benchmarks. Rigorous, scalable evaluation infrastructure has become the critical bottleneck.

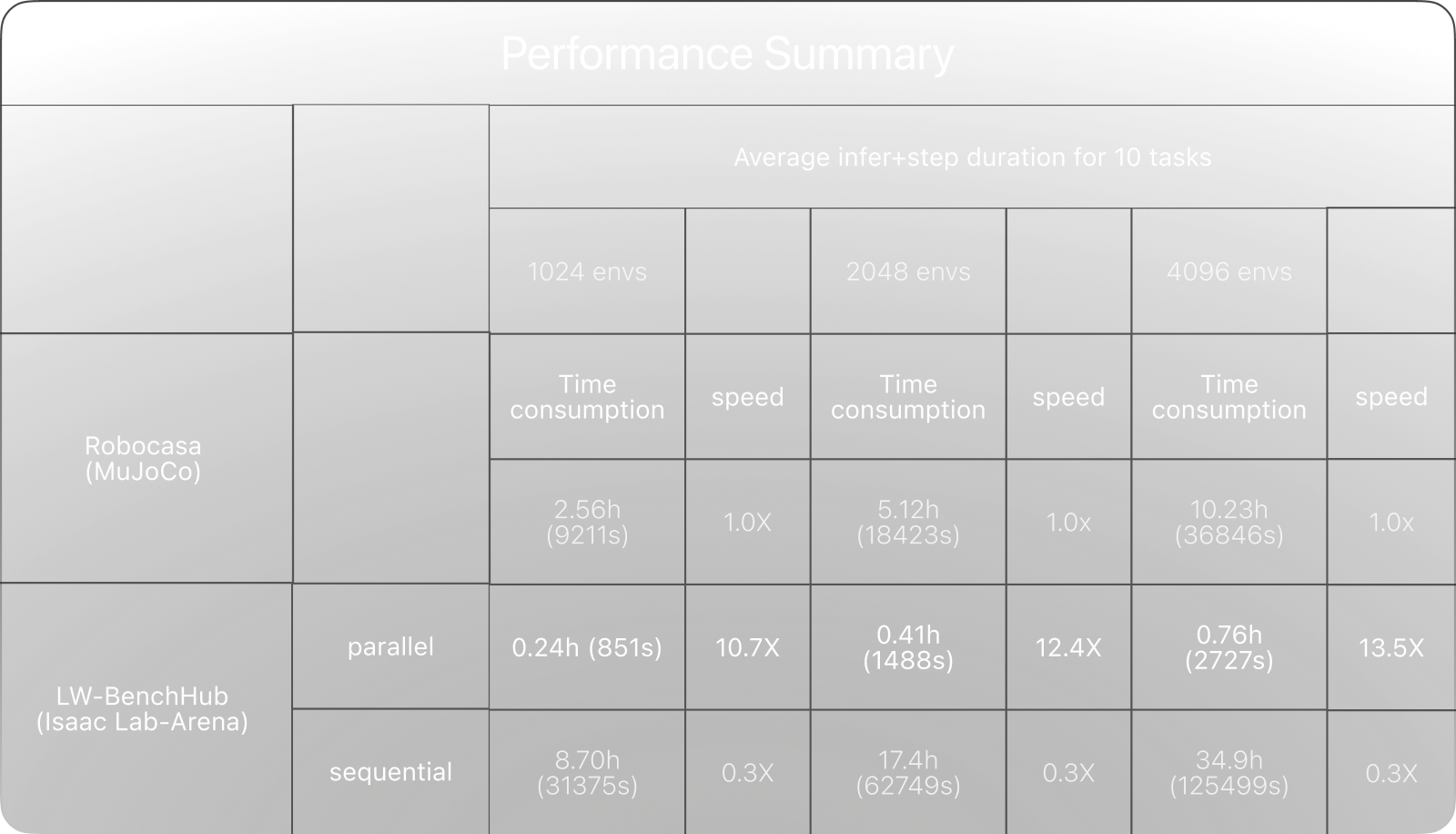

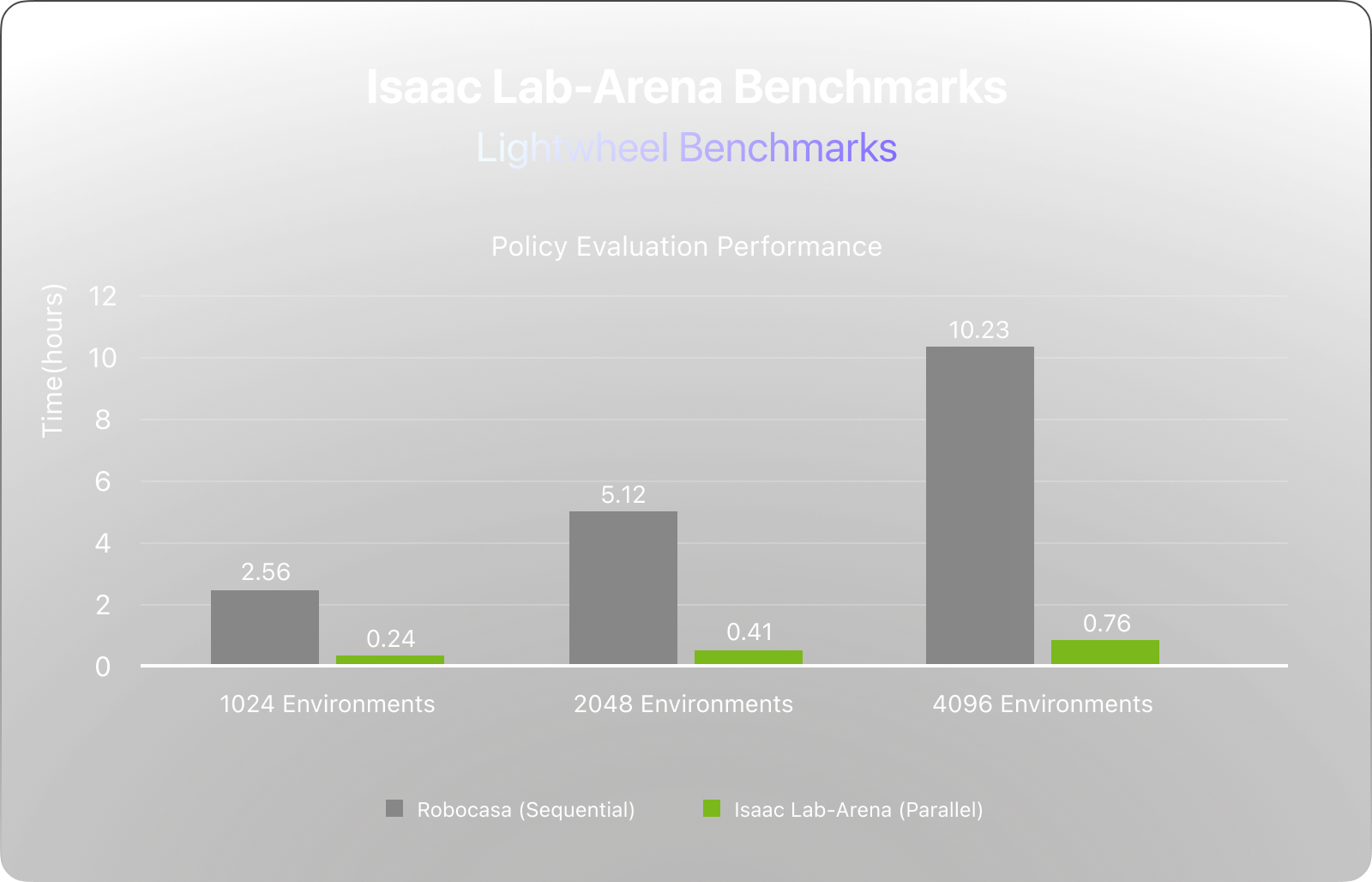

NVIDIA Isaac Lab-Arena is an open-source framework for large-scale policy evaluation, featuring GPU-accelerated parallel evaluation that could transform policy evaluation workflows. Today, we are sharing comprehensive benchmark results that validate those claims: Isaac Lab-Arena achieves up to 13.5× faster policy evaluation than sequential execution, reducing evaluation time from over 10 hours to under 1 hour for complex manipulation tasks.

This performance gain is not theoretical, and it directly powers RoboFinals, our industry-grade evaluation platform already adopted by leading foundation model teams. More broadly, it demonstrates how GPU-accelerated parallelism can unlock evaluation at the scale and speed required for modern physical AI development.

The Evaluation Challenge: Speed Meets Scale

As generalist robot policies emerge, evaluation complexity grows rapidly. A single policy must be tested across thousands of combinations of tasks, objects, scenes, robots, and physical parameters.
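To see how quickly such an evaluation matrix grows, here is a minimal sketch that counts combinations across a few axes. The task names come from the benchmark described in this post; every other axis name and size is an illustrative assumption, not a figure from the benchmark.

```python
from itertools import product

# Hypothetical evaluation axes; apart from the task names, all values
# here are made up for illustration.
axes = {
    "task": ["PrepareCoffee", "QuickThaw", "SteamInMicrowave"],
    "object_set": [f"objects_{i}" for i in range(10)],
    "scene": [f"kitchen_{i}" for i in range(10)],
    "robot": ["robot_a", "robot_b"],
    "friction_scale": [0.5, 1.0, 1.5],
}

# Each combination is one condition the policy would need to be evaluated on.
conditions = list(product(*axes.values()))
print(len(conditions))  # 3 * 10 * 10 * 2 * 3 = 1800
```

Even with these modest axis sizes, the matrix reaches the thousands, and adding one more axis multiplies the total again.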

Running these evaluations sequentially doesn’t scale. Waiting hours or days for results slows iteration and makes thorough testing impractical.

Isaac Lab-Arena addresses this with GPU-accelerated, parallel evaluation, enabling developers to benchmark policies at scale and iterate faster with confidence.

Benchmark Setup

We conducted comprehensive performance benchmarks comparing Lightwheel-RoboCasa-Tasks, built on Isaac Lab-Arena, against the widely used RoboCasa Benchmark, built on the Robosuite and MuJoCo simulation stack. The test was designed to isolate performance differences in parallel simulation and runtime infrastructure, not in the evaluation method or policy.

*For detailed benchmark methodology and raw data, see our technical documentation.*

Test Configuration

We executed 10 complex and long-horizon manipulation tasks from the RoboCasa benchmark (PrepareCoffee, QuickThaw, SteamInMicrowave, etc.) using the NVIDIA GR00T N1.5 policy on a Panda-Omron robot. Each task ran 200-step rollouts across 1,024 / 2,048 / 4,096 parallel environments on 8× NVIDIA RTX 6000D GPUs.
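For a rough sense of scale, the sketch below computes the number of simulation steps this configuration implies. It assumes that every environment runs one 200-step rollout per task and that environments are split evenly across the 8 GPUs. The post states neither detail, so treat both as assumptions.

```python
NUM_TASKS = 10           # from the test configuration
STEPS_PER_ROLLOUT = 200  # from the test configuration
NUM_GPUS = 8             # from the test configuration


def workload(num_envs: int) -> dict:
    """Step counts for one benchmark scale (assumes one rollout per env)."""
    return {
        "envs_per_gpu": num_envs // NUM_GPUS,  # assumption: even split
        "steps_per_task": num_envs * STEPS_PER_ROLLOUT,
        "total_steps": num_envs * STEPS_PER_ROLLOUT * NUM_TASKS,
    }


for n in (1024, 2048, 4096):
    print(n, workload(n))
```

Under these assumptions, the largest scale amounts to about 8.2 million environment steps across the 10 tasks.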

Controlled Comparison

We tested three configurations to cleanly isolate performance factors:

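- Isaac Lab-Arena parallel: environments running concurrently on GPUs (the target configuration)

- Isaac Lab-Arena sequential: sequential execution (to isolate parallelization benefit)

- MuJoCo sequential: sequential execution (industry baseline)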

All three configurations ran the same tasks, same number of episodes, same policy inference—ensuring throughput differences came purely from the simulator and runtime stack, not from task complexity or policy behavior.

Results: Parallelism Delivers at Scale

Key Findings

- 1,024 envs: 10.7× faster than MuJoCo

- 2,048 envs: 12.4× faster than MuJoCo

- 4,096 envs: 13.5× faster than MuJoCo

A critical insight emerges when comparing Isaac Lab-Arena's sequential and parallel modes. When running sequentially, Isaac Lab-Arena actually takes 34.9 hours versus MuJoCo's 10.2 hours for the same workload.

Why? Because the Isaac Lab-Arena version of the RoboCasa Tasks uses higher-fidelity assets with refined collision geometry, improved contact surfaces, and enhanced materials and textures, all validated through teleoperation to ensure realistic interactions. This fidelity costs compute when running sequentially.

However, comparing Isaac Lab-Arena sequential (34.9 h) to Isaac Lab-Arena parallel (0.76 h) shows a 46× speedup from parallelization alone.

This cleanly demonstrates that the throughput gain comes entirely from GPU-accelerated parallelism, not from cutting corners on simulation quality. In fact, you get higher-fidelity simulation at roughly 13× faster throughput than the MuJoCo baseline: the best of both worlds.
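The ratios quoted above follow directly from the reported wall-clock times. The short sketch below reproduces them; the only inputs are the three hour figures from this post, and the helper name `speedup` is ours.

```python
# Wall-clock hours reported in this post.
ARENA_SEQUENTIAL_H = 34.9
MUJOCO_SEQUENTIAL_H = 10.2
ARENA_PARALLEL_H = 0.76


def speedup(baseline_h: float, candidate_h: float) -> float:
    """How many times faster the candidate is than the baseline."""
    return baseline_h / candidate_h


print(f"parallelization alone: {speedup(ARENA_SEQUENTIAL_H, ARENA_PARALLEL_H):.1f}x")
print(f"vs. MuJoCo baseline:   {speedup(MUJOCO_SEQUENTIAL_H, ARENA_PARALLEL_H):.1f}x")
print(f"sequential fidelity cost: {speedup(ARENA_SEQUENTIAL_H, MUJOCO_SEQUENTIAL_H):.1f}x slower")
```

The second ratio comes out at about 13.4×, slightly below the headline 13.5×. That gap is consistent with rounding in the reported hours, assuming the 0.76 h figure corresponds to the 4,096-environment run.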

What This Means for Foundation Model Evaluation

Transforming Development Workflows

The practical impact on development workflows is significant:

Sequential evaluation workflow:

- Submit evaluation job

- Wait for results (hours to overnight depending on scale)

- Review and iterate

- Limited daily iterations

GPU-accelerated parallel workflow:

- Submit evaluation job

- Results ready in under an hour

- Rapid iteration cycles

- Multiple evaluation runs per day

For teams training large foundation models, this acceleration enables more thorough exploration of model architectures, data compositions, and hyperparameters within the same development timeline.
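As a back-of-the-envelope illustration of the two workflows, the sketch below estimates how many evaluation runs fit into one day if runs are executed back to back. It uses the 10.2 h and 0.76 h figures from the benchmark and assumes zero overhead for job submission, review, and queuing, so real numbers would be lower.

```python
import math

HOURS_PER_DAY = 24


def max_runs_per_day(hours_per_run: float) -> int:
    """Upper bound on back-to-back evaluation runs in 24 hours (no overhead)."""
    return math.floor(HOURS_PER_DAY / hours_per_run)


print(max_runs_per_day(10.2))  # sequential MuJoCo baseline
print(max_runs_per_day(0.76))  # Isaac Lab-Arena parallel
```

Under these assumptions the sequential baseline allows about 2 runs per day, while the parallel configuration allows about 31.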

Enabling Larger-Scale Evaluation

These benchmarks tested homogeneous parallelism for up to 4,096 environments with only object positions varying. The real power will emerge with heterogeneous parallelism (different objects per parallel environment) in version 0.2 of Isaac Lab-Arena, coming soon.
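The difference between the two modes can be sketched with plain Python data structures. Everything here is hypothetical: `EnvConfig`, the two helper functions, the object names, and the position range are our own illustrations, not Isaac Lab-Arena types or APIs.

```python
import random
from dataclasses import dataclass


@dataclass
class EnvConfig:
    """Hypothetical per-environment settings; not an Isaac Lab-Arena type."""
    object_name: str
    object_xy: tuple[float, float]


def random_xy(rng: random.Random) -> tuple[float, float]:
    # Illustrative position range in metres; the real range is not stated.
    return (rng.uniform(-0.2, 0.2), rng.uniform(-0.2, 0.2))


def homogeneous(num_envs: int, object_name: str, rng: random.Random) -> list[EnvConfig]:
    # Same object in every environment; only the position varies.
    return [EnvConfig(object_name, random_xy(rng)) for _ in range(num_envs)]


def heterogeneous(num_envs: int, object_names: list[str], rng: random.Random) -> list[EnvConfig]:
    # Each environment may get a different object as well as a different position.
    return [EnvConfig(rng.choice(object_names), random_xy(rng)) for _ in range(num_envs)]


rng = random.Random(0)
homo = homogeneous(4096, "mug", rng)
hetero = heterogeneous(4096, ["mug", "bowl", "kettle"], rng)
print(len({c.object_name for c in homo}), len({c.object_name for c in hetero}))
```

In the homogeneous case a single batch covers one object under many placements; in the heterogeneous case the same batch also spans object variety, which is what makes broader coverage possible without extra runs.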

The ability to test across truly diverse scenarios in parallel will be transformative for robust policy development. This scaling is exactly why Isaac Lab-Arena forms the foundation of RoboFinals: as evaluation requirements grow from hundreds to thousands of variations, GPU-accelerated parallelism becomes not just helpful, but essential.

How This Powers RoboFinals

How Isaac Lab-Arena's performance enables RoboFinals:

- 13.5× speedup means multiple evaluation iterations per day instead of overnight waits

- Thousands of task variations evaluated simultaneously via GPU parallelism

- Cross-robot and multi-physics validation without multiplying evaluation time

Leading foundation model teams use this infrastructure to rapidly iterate and measure capability gains beyond academic benchmarks.

This is the bridge from infrastructure to impact: Isaac Lab-Arena provides the scalable foundation, RoboFinals delivers the evaluation platform, and frontier labs gain the ability to evaluate at the speed of model development.

Get Started

Isaac Lab-Arena on GitHub

of Isaac Lab-Arena

early access for comprehensive evaluation